Decoding the Inner Workings of Claude AI

As artificial intelligence evolves, understanding the intricacies of its operations grows increasingly critical. The latest research from Anthropic highlights significant strides in uncovering the complex mechanisms behind their Claude model’s decision-making capabilities. By exploring how these systems function, we not only enhance AI performance but also gain insights necessary for ensuring their reliability as they interact with the world more autonomously.

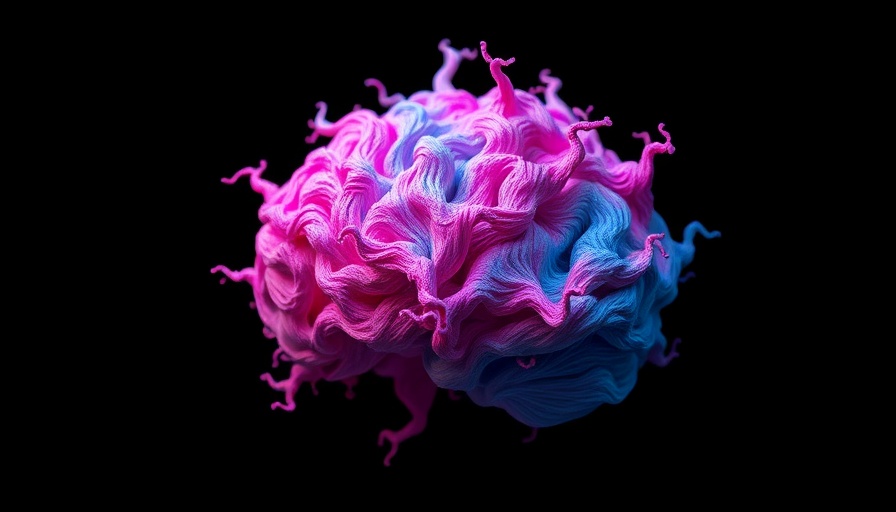

The Challenge of Understanding AI

AI systems, particularly large language models (LLMs) like Claude, often operate in inscrutable ways and are frequently likened to black boxes. Traditional software was written by hand as explicit logical rules, which made its behavior comparatively easy to trace. In contrast, neural networks learn from vast data sets and develop their own operational logic, which can be frustratingly opaque. Anthropic’s research aims to peel back these layers and reveal the processes driving the model’s outputs, a crucial endeavor as AI becomes integral to complex tasks across various fields.

Revolutionary Research Approaches

In two recent papers, Anthropic demonstrated a new method for interpreting what happens inside a language model. The researchers tested it on the Claude 3.5 Haiku model, building a replacement model that mimics Haiku’s operations using clearer, more interpretable internal features. This allowed them to follow the model’s intermediate steps through various tasks, such as reasoning, poetry generation, and problem-solving. It is a significant move toward making AI’s internal processes more transparent.

Key Findings: More than Just Prediction

One of the insights from this research dismantles a prevalent myth about AI: that large language models work purely by predicting the next word in a sentence. In reality, the study revealed that Claude’s internal workings involve more complex decision-making, including the selection of features that are language-independent. Before generating a response, the model often organizes its thoughts and concepts abstractly, which points to more internal structure than word-by-word prediction alone would suggest.

The Broader Implications for AI and Society

These findings go beyond academic curiosity; they have substantial implications for the AI industry at large. As AI systems become more sophisticated, the potential for misinterpreting their behavior grows, which calls for robust frameworks for their deployment. Knowledge gained from Anthropic’s research can lay a foundation for more reliable AI, allowing industries to use these tools with greater confidence and foresight.

The Future of AI Understanding

As we continue to traverse the landscape of AI technology, the quest for transparency and understanding becomes paramount. Research from Anthropic illustrates a commitment to unraveling the complexities of AI's thought processes, a crucial step in the evolution of intelligent systems capable of engaging in complex reasoning. This pursuit will pave the way for developing AI systems that are not only more capable but also more aligned with human objectives and ethical standards.
