A New Era of Contract Management: Gavel's Deep Reasoning Mode

Gavel has launched Deep Reasoning Mode in Gavel Exec, its Microsoft Word add-in for contract redlining, drafting, and negotiation. The feature draws on multiple generative AI models, including GPT-5, and aims to refine how legal professionals manage and negotiate contracts.

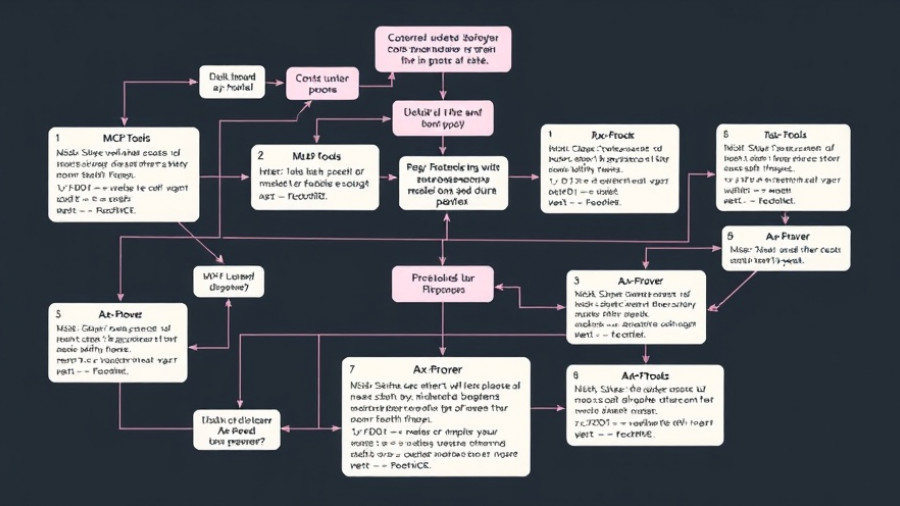

How Deep Reasoning Mode Works

CEO Dorna Moini describes Deep Reasoning Mode as the culmination of extensive training and research. What sets Gavel Exec apart from other AI-driven tools is its integration of several models, each tailored to legal documentation. Because the platform is built around how lawyers typically redline documents, it achieves an 80% acceptance rate on its redline edits, which speaks to its usefulness in practice.

The Functionality of Multiple AI Models

Moini explains that Deep Reasoning is built on a network of AI agents, each using a distinct model for a specific task. These tasks range from understanding document structure to drafting precise redlines and providing context-sensitive feedback. Assigning each task to a model suited to it is intended to improve results across the different stages of contract work.

Benchmarking Against Legal Standards

Continuous benchmarking with licensed lawyers allows Gavel Exec to adapt its model pool based on performance observed during real-world applications. For instance, while models like GPT-5 exhibit superior logical reasoning and factual accuracy, Gavel Exec compensates for each model's limitations by intelligently swapping between them, ensuring optimal outcomes for specific tasks.
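The idea of adjusting a model pool from benchmark results can be illustrated with a minimal sketch. This is not Gavel's implementation: the model labels, task names, and acceptance figures below are made-up placeholders, and the selection rule (pick the model with the highest reviewer acceptance per task) is an assumption chosen for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    model: str         # hypothetical model label
    task: str          # hypothetical task name, e.g. "redline"
    acceptance: float  # fraction of outputs reviewers accepted (0.0 to 1.0)


def pick_models(results):
    """Return {task: model}, keeping the highest-acceptance model per task."""
    best = {}
    for r in results:
        current = best.get(r.task)
        if current is None or r.acceptance > current.acceptance:
            best[r.task] = r
    return {task: r.model for task, r in best.items()}


# Illustrative numbers only; they are not measurements from any real system.
results = [
    BenchmarkResult("model-a", "redline", 0.75),
    BenchmarkResult("model-b", "redline", 0.60),
    BenchmarkResult("model-a", "structure", 0.65),
    BenchmarkResult("model-b", "structure", 0.90),
]
routing = pick_models(results)
```

Rerunning `pick_models` whenever new review data arrives would update the routing table, which is one simple way a system could swap models per task over time.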

Breaking Limits: The Size of Context Windows

Context window size is often a critical element for AI performance, particularly in legal contexts where documents can be lengthy. Moini assures users that, although individual models have their limits, Gavel Exec intelligently segments projects to manage extensive documents seamlessly. This feature enables the application of reasoning across hundreds of pages while referencing multiple external documents simultaneously.
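Segmenting a long document so that each piece fits within a model's limit can be sketched as follows. The article does not describe how Gavel Exec does this, so the approach here is an assumption: paragraphs are packed greedily into segments, and word count stands in for a real token count. The function name `segment` and the `max_words` parameter are hypothetical.

```python
def segment(paragraphs, max_words):
    """Greedily pack paragraphs into segments of at most max_words words.

    A paragraph that is longer than max_words on its own is not split;
    it becomes a single oversized segment.
    """
    segments = []
    current = []
    count = 0
    for p in paragraphs:
        n = len(p.split())  # word count as a rough proxy for tokens
        if current and count + n > max_words:
            # The next paragraph would overflow the budget: close this segment.
            segments.append(current)
            current = []
            count = 0
        current.append(p)
        count += n
    if current:
        segments.append(current)
    return segments


doc = ["a b c", "d e", "f g h i", "j"]
parts = segment(doc, max_words=5)
```

Splitting on paragraph boundaries keeps each clause intact. A production system would likely also need to carry definitions and cross-references between segments, which this sketch does not attempt.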

The Specific Benefits of Deep Reasoning Mode

1. Precision in Legal Edits: Deep Reasoning Mode produces surgical redlines tailored to both legal standards and negotiation strategy, avoiding common pitfalls such as irrelevant changes.

2. Customizable AI Solutions: Gavel was among the pioneers to let firms mold AI to fit their needs. The introduction of larger context windows in Deep Reasoning broadens this opportunity, enabling consistent application across various documents.

3. Enhanced Contract Analysis: Gavel Exec offers a sophisticated understanding when suggesting alterations, alerting users to potential risks or deviations from market norms.

Future Predictions for AI in Legal Tech

The trajectory of AI in legal technology points toward further advances. As tools like Gavel Exec continue to integrate more complex models and sophisticated algorithms, we may soon see a shift in how contracts are approached, negotiated, and refined. Lawyers of tomorrow could rely on AI not merely as an assistant but as an essential partner in legal negotiations.

Counterarguments: Perspectives on AI in Law

However, the rapid pace of AI integration in the legal realm isn't without skepticism. Critics caution against over-reliance on AI technology, emphasizing the importance of maintaining human oversight in legal matters. Issues related to data privacy, model bias, and ethical considerations remain hot topics of debate.

What This Means for Legal Professionals

For legal professionals, understanding the implications of technologies like Gavel Exec is pivotal. Learning how AI works and adapting to new tools can support not only job security but also greater efficiency and productivity in their practice.

In conclusion, Gavel's Deep Reasoning Mode illustrates the strides being made in bringing AI into legal practice. As the industry embraces these innovations, professionals may find themselves better equipped to navigate the complexities of modern contract law.

Engaging with this technology today can set lawyers apart in an increasingly competitive field.
