The Launch of ERNIE-4.5-21B-A3B-Thinking: A New Era in AI Reasoning

Baidu's newly released ERNIE-4.5-21B-A3B-Thinking model is a notable step for artificial intelligence, particularly in deep reasoning. Baidu designed the model to emphasize efficiency without sacrificing performance. To understand what sets it apart, this article examines its architecture, its capabilities, and its potential impact on the AI landscape.

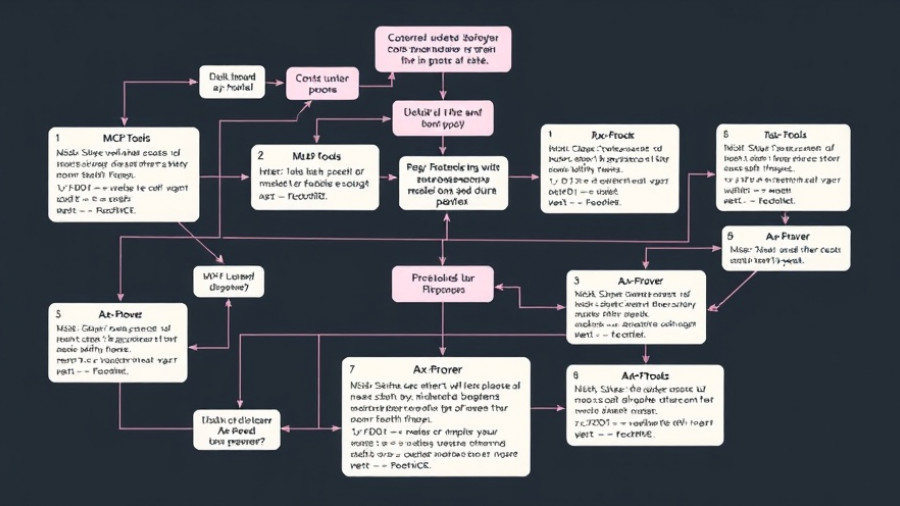

A Sparse Mixture-of-Experts (MoE) Architecture

At the core of ERNIE-4.5-21B-A3B-Thinking is a Mixture-of-Experts (MoE) architecture. Whereas a dense model uses all of its parameters for every token, an MoE model routes each token to a small subset of expert sub-networks, so only about 3 billion of the model's 21 billion parameters are active for each token processed. This keeps per-token compute far below that of a 21B dense model, although all 21 billion parameters must still be held in memory. The design reflects a broader move away from purely dense architectures toward sparse models that balance total capacity against per-token cost.
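To make per-token routing concrete, here is a minimal, stdlib-only Python sketch of a generic top-k MoE layer. The expert count (8), top-k value (2), vector size, and the simple scaling "experts" are toy, hypothetical choices, not ERNIE's actual configuration or code. The sketch only shows that a router scores every expert while just the selected few actually run.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_layer(token, experts, router, k):
    """Apply a sparse MoE layer to one token vector.

    router[i] is a weight vector whose dot product with the token gives
    expert i's score; only the top-k experts are evaluated.
    """
    logits = [sum(w * x for w, x in zip(r, token)) for r in router]
    out = [0.0] * len(token)
    for idx, weight in top_k_route(logits, k):
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out

def active_fraction(active_params, total_params):
    """Share of parameters touched per token."""
    return active_params / total_params

# Toy configuration (hypothetical, not ERNIE's real sizes).
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
calls = []

def make_expert(i, scale):
    def expert(token):
        calls.append(i)  # record which experts actually run
        return [scale * x for x in token]
    return expert

experts = [make_expert(i, 1.0 + 0.1 * i) for i in range(NUM_EXPERTS)]
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output = moe_layer(token, experts, router, TOP_K)
print(len(calls), "of", NUM_EXPERTS, "experts ran")  # → 2 of 8 experts ran
print(f"ERNIE-4.5-21B-A3B active share: {active_fraction(3e9, 21e9):.1%}")  # → 14.3%
```

This sketch renormalizes the softmax over the chosen experts only; computing the softmax over all experts before selecting is another common variant. Production MoE layers also add load-balancing mechanisms that this toy omits.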

Mastering Long-Context Reasoning

One standout feature of ERNIE-4.5-21B-A3B-Thinking is its 128,000-token context window. This is more than a bigger number: it lets the model read and reason over long inputs in a single pass, which supports complex, multi-step reasoning. For example, users can ask it to integrate findings from a lengthy academic paper or to correlate logic spread across multiple code files, tasks that shorter-context models can only handle by splitting the input into separate pieces.
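The following Python sketch shows one way a user might check whether several documents fit into a single 128,000-token prompt before sending it. The 4-characters-per-token estimate, the 16,000-token reserve for the model's output, and the function names are all hypothetical assumptions for illustration; exact counts require the model's own tokenizer.

```python
CONTEXT_TOKENS = 128_000  # context length quoted in the article
CHARS_PER_TOKEN = 4       # rough heuristic (assumption); use the real tokenizer in practice

def estimate_tokens(text):
    """Approximate token count via ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)

def pack_documents(docs, reserve_for_output=16_000, budget=CONTEXT_TOKENS):
    """Greedily add (name, text) pairs in order while they fit the input budget.

    A document that does not fit is skipped, but later, smaller documents
    may still be added. Returns (included_names, skipped_names, tokens_used).
    """
    limit = budget - reserve_for_output
    used = 0
    included, skipped = [], []
    for name, text in docs:
        cost = estimate_tokens(text)
        if used + cost <= limit:
            included.append(name)
            used += cost
        else:
            skipped.append(name)
    return included, skipped, used

# Hypothetical inputs: a long paper plus two source files.
docs = [("paper.txt", "x" * 300_000),
        ("utils.py", "y" * 100_000),
        ("main.py", "z" * 80_000)]
included, skipped, used = pack_documents(docs)
print(included, skipped, used)  # → ['paper.txt', 'utils.py'] ['main.py'] 100000
```

Reserving output space matters for a reasoning model, since its step-by-step answer also consumes the context window.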

Innovative Training Strategies for Enhanced Reasoning

The model is built in deliberate, layered stages, each extending its capabilities. Text-only pretraining first establishes the base language model, with the context length extended progressively from 8,000 to 128,000 tokens. This variant includes no vision training, which keeps its focus on textual reasoning. Post-training then applies supervised fine-tuning (SFT) and progressive reinforcement learning (PRL) to strengthen the model across reasoning tasks ranging from mathematics to logic.
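The staged recipe can be summarized as an ordered pipeline. In this Python sketch, only the stage order and the 8,000- and 128,000-token pretraining endpoints come from the text; the doubling progression and its intermediate lengths (16,000, 32,000, 64,000) are a hypothetical illustration, and the `Stage` structure is invented for clarity.

```python
from dataclasses import dataclass, field

@dataclass
class Stage:
    name: str
    # Context lengths used during the stage; empty means "not specified here".
    context_lengths: list = field(default_factory=list)

def doubling_schedule(start, end):
    """Context lengths from start to end, doubling each step (hypothetical)."""
    if start <= 0 or end < start:
        raise ValueError("need 0 < start <= end")
    lengths = [start]
    while lengths[-1] < end:
        lengths.append(min(lengths[-1] * 2, end))
    return lengths

# Stage order follows the article; only the pretraining endpoints are sourced.
PIPELINE = [
    Stage("text-only pretraining", doubling_schedule(8_000, 128_000)),
    Stage("supervised fine-tuning (SFT)"),
    Stage("progressive reinforcement learning (PRL)"),
]

for stage in PIPELINE:
    print(stage.name, stage.context_lengths or "-")
```

Growing the context gradually lets most pretraining happen on cheaper short sequences, with long-sequence training reserved for later stages.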

Impact on the Future of AI and Society

The release of ERNIE-4.5-21B-A3B-Thinking could enable new kinds of AI applications. Because the model can process long inputs and work through complex, multi-step reasoning tasks, fields ranging from education to software development stand to benefit. As organizations increasingly rely on AI to support decision-making, a model that offers this reasoning capability at a modest per-token compute cost could raise productivity and drive new innovations in many fields.

The Broader Context of Deep Reasoning AI

This development reflects a broader shift in AI toward systems that understand context and reason their way to informed decisions. As more sectors adopt such technology, professionals and enthusiasts alike benefit from following advancements like ERNIE-4.5. Understanding how these models work is valuable not only for technical specialists but for anyone interested in the evolving relationship between technology and society.

Conclusion: Embrace the Future of AI

With ERNIE-4.5-21B-A3B-Thinking, Baidu shows a commitment not only to stronger AI capabilities but also to efficiency, delivering deep reasoning while activating only a fraction of the model's parameters for each token. As advanced AI systems move into practical, real-world applications, engaging closely with these changes will benefit individuals and organizations alike. It is an exciting time for AI, and understanding models like ERNIE can help you put these innovations to effective use.
