Understanding Agentic AI: The Future of Autonomous Decision-Making

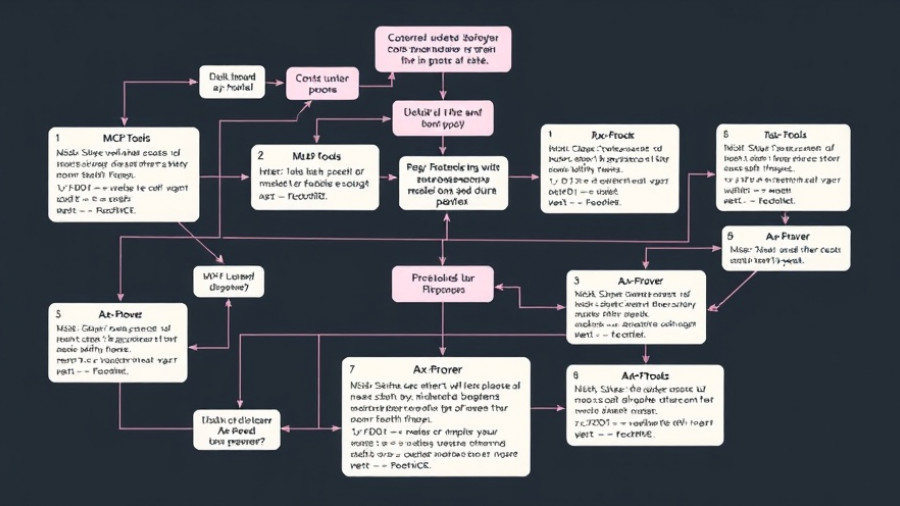

Artificial intelligence is evolving rapidly, advancing from basic machine learning into what is now called "agentic AI." These AI agents promise a future in which machines perform tasks that require both reasoning and interaction with complex systems. But agentic AI can take two distinct forms that would shape its role in our lives very differently: one in which AI serves as an advisor to humans, and another in which AI acts autonomously. Which of these models prevails will have profound implications for society and the economy.

The Advisor Model: Enhancing Human Decision-Making

In the first model, AI acts as a cognitive partner, providing tailored recommendations that help individuals make informed choices. This approach strengthens human agency. By supplying relevant, reliable, and personalized information, AI can improve outcomes in areas like healthcare, finance, and education while leaving humans in control of their decisions. The richer and more nuanced the information AI provides, the better equipped people will be to navigate complex situations.

The benefit of this model lies in its potential to enhance human decision-making rather than replace it. As the philosopher David Hume argued, our decisions are driven not by logic alone but largely by passions and emotions. With AI as an advisor, people can balance their instinctive responses with data-driven insights, improving the quality of their decisions across many areas of life.

Autonomous AI: A Double-Edged Sword

At the opposite end of the spectrum lies the autonomous model, which envisions machines taking actions on behalf of humans. While the promise of greater efficiency and fewer human errors is enticing, this model raises significant concerns. For instance, delegating decisions to autonomous AI could automate away jobs, potentially widening economic inequality and diminishing people's sense of control over their own lives.

Autonomy also raises ethical questions about accountability and transparency in how AI systems reach their decisions. If an autonomous system makes a flawed decision, who is responsible? The challenge lies in ensuring that such systems operate within boundaries that reflect human values, rights, and diverse perspectives.

A Balancing Act: Navigating the Future of Agentic AI

The tech industry must tread carefully between these two models, above all to preserve the delicate social fabric that binds us together. If autonomous AI takes over too many decisions, opportunities for personal growth and fulfillment may shrink, leaving a society in which individuals feel increasingly removed from their own lives.

The economic implications are equally profound. As industries adopt increasingly autonomous technology, understanding the balance between cooperation and conflict becomes essential. Many human interactions, such as negotiations, involve inherent conflicts of interest. These dynamics could be disrupted if negotiations were handled entirely by AI agents that lack empathy or an understanding of the motives behind each party's position.

Looking Ahead: The Path Forward

The future of agentic AI is not predetermined. To reap its benefits while mitigating its risks, stakeholders must actively engage in shaping its direction. This will require collaboration among technologists, ethicists, policymakers, and the public to define the ethical frameworks and operational standards that govern AI systems.

Ultimately, agentic AI could reshape our societies in unexpected ways. Continually re-evaluating the roles we assign to these systems will be critical to ensuring that they enhance, and never replace, the rich tapestry of human decision-making.

Final Thoughts: Embracing Hope Amidst Uncertainty

As we stand on the brink of an AI-infused future, it is essential to stay focused on human agency and on the moral questions that AI raises. We can harness agentic AI to amplify our capabilities while safeguarding the values that make us human. If you are an AI enthusiast, engaging with these discussions equips you to contribute meaningfully to the ongoing evolution of this transformative technology.